Solutions

For Payers

Simplify complex processes and improve payer-provider collaboration.

The Availity Platform for Payers

Network Connectivity for Payers

Connect to the most providers and HIT partners nationwide.

Multi-Payer Portal

Improve collaboration with your provider network by automating core workflows.

Intelligent Utilization Management

Help ensure the right care at the right time by transforming the prior authorization process.

Payment Accuracy

Reduce administrative waste by shifting edits early in the claim lifecycle.

-

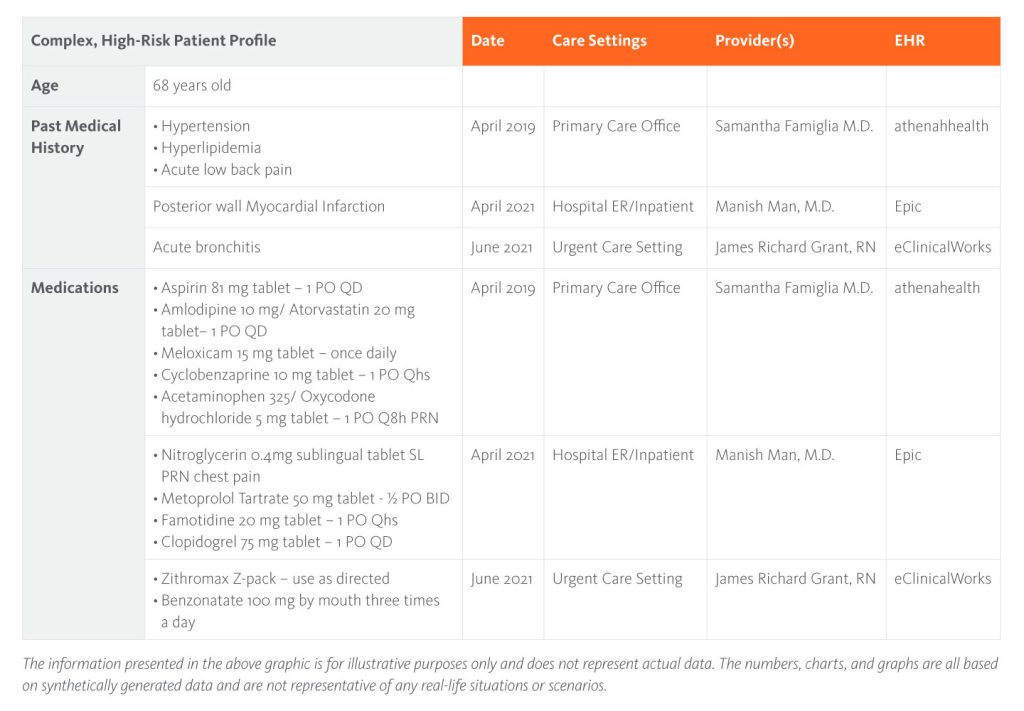

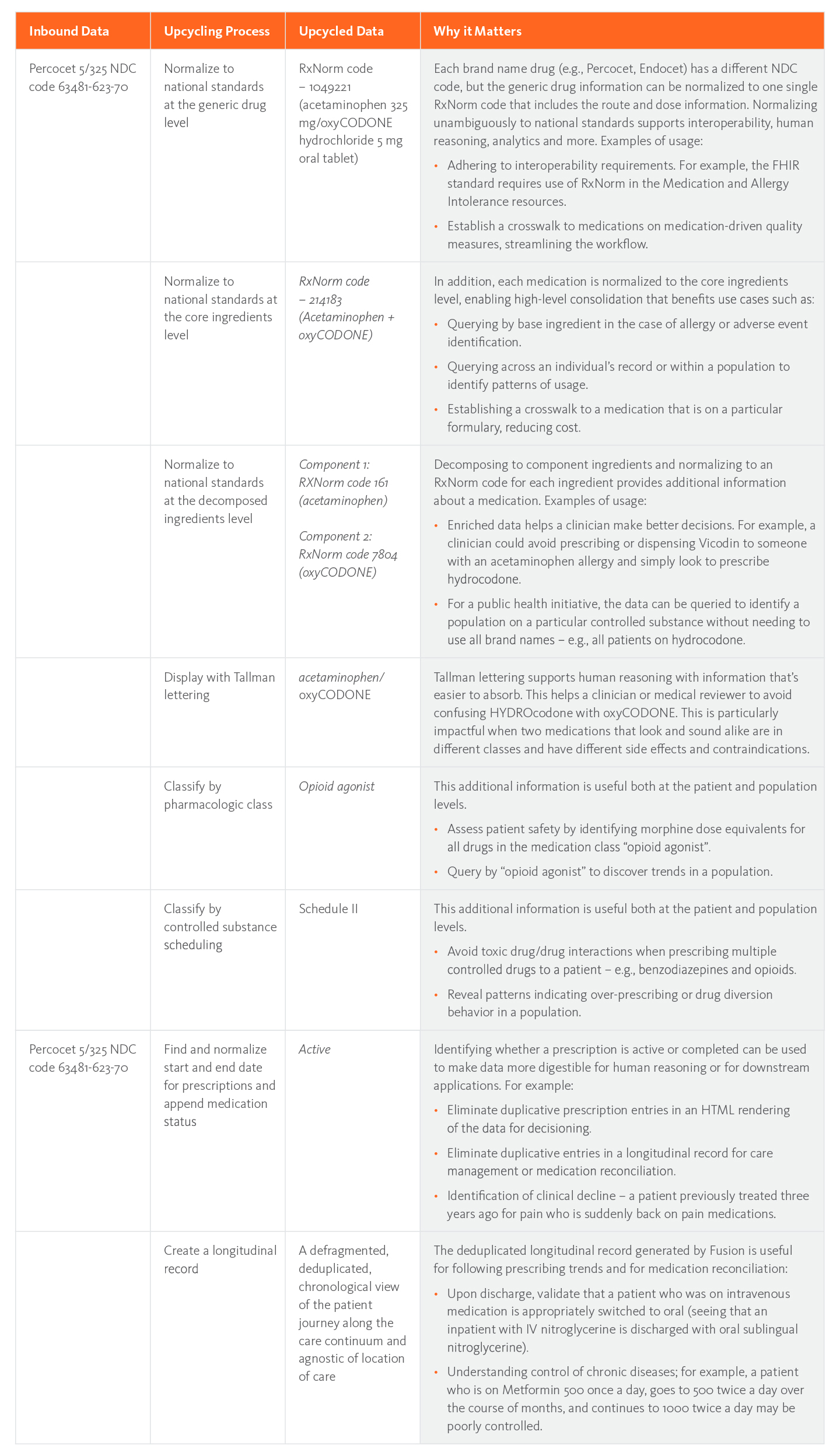

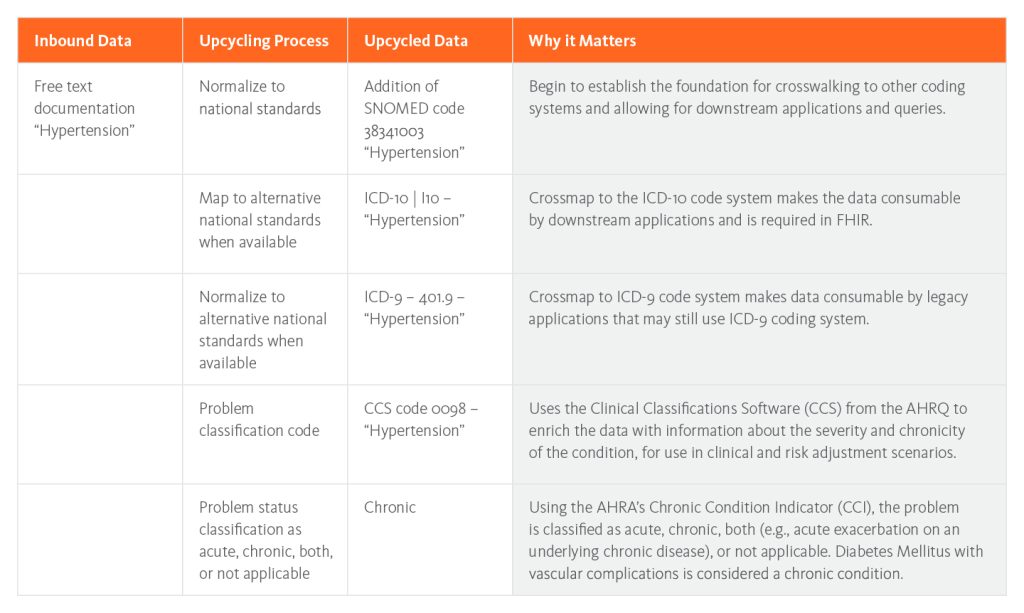

Clinical Data Solutions

Make clinical data work for you by streamlining acquisition, improving quality, and optimizing workflows.

Provider Lifecycle Solutions

Improve the quality of provider data for use across the enterprise.

Digital Correspondence

Drive cost out of provider communications by digitizing paper correspondence.

For Providers

Streamline workflows, reduce denials, and ensure accurate payments.

The Availity Platform for Providers

Network Connectivity for Providers

Improve revenue cycle performance with fast and secure connections to payers nationwide.

- Revenue Cycle Management

For HITs

Seamlessly deliver complete and accurate healthcare information.

The Availity Platform for HITs

Network Connectivity for HITs

Scale your organization by connecting with payers and providers nationwide.

API Marketplace

Access our portfolio of robust and compliant API connections.

Resources

Insights

Blog

Get the latest industry insights.

Customer Success Stories

See success stories from our customers.

- Availity Rapid Recovery

- Insourcing Utilization Management

- Infographics

- Partner Resources

- Payer Lists

About

Connect

Locations

See where we're located.

Contact Sales

Find the best way to get in touch.

Customer Support

Get in touch with customer support.

Events

Learn about upcoming industry events.
